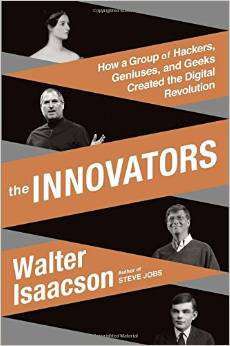

Book Review - The Innovators

January 05 2015

Walter Isaacson’s The Innovators tells the story of the people responsible for the current digital world. I really enjoyed Walter Isaacson’s biography of Steve Jobs. This book doesn’t go nearly as deep, but it covers many more areas and is an enjoyable read. Effectively, it’s almost like the history book of computer engineering.

I was a dual-major computer engineering and electrical engineering student. As such, I took classes in information theory, semiconductor physics, and computer architecture, just to name a few. Over the course of these classes, I learned about important figures indirectly, through concepts like information entropy (Claude Shannon), the transistor (William Shockley), and the von Neumann architecture (John von Neumann).

But those people were never real - I didn’t learn about Alan Turing’s latent homosexuality or William Shockley’s tendency to be a huge asshole (see below). The Innovators brings these people alive, telling us about how they were raised, what influenced them, and what work needed to happen before they made their scientific breakthroughs. I gave a copy of this book to my younger brother to read. He’s interested in possibly studying computer science in college. I think this book is a great complement to a CS/EE degree, and probably required reading for any curious mind.

The topics covered in this book span the major inventions and breakthroughs behind the technologies that are pervasive in our world now. Isaacson starts with Lady Ada Lovelace, Charles Babbage, and his Difference and Analytical Engines, noting that Lady Lovelace created the original vision for computer programming. Next, he covers all the disparate teams that had a hand in creating the first computer capable of taking general instructions and being programmed for general purposes. This chapter (and the next on programming these early computers) is probably the most interesting - there were so many people and teams all converging on the same idea, that vacuum tubes could be used as transistor-like switches to power a logical machine. It’s really interesting to see how their approaches differed and how each of them ended up being remembered.

Isaacson then covers the transistor and how this led to the invention of the microchip. This ended up being my favorite story from the book. All electrical engineering and physics students learn about William Shockley and how he invented the transistor. What we didn’t learn is that two people working for Shockley, John Bardeen and Walter Brattain, actually arrived at the idea of using a semiconductor for an on/off device before Shockley did. Shockley was so jealous of their success that he developed his own, superior, design a few months later based on the P-N junction. Shockley’s design was better, easier to manufacture, and later became the basis for microchips.

The success of the transistor only made Shockley worse as a person. He started his own company to produce transistors at scale and recruited some really talented young PhDs. He was such a terrible and abusive manager that eight of his talented recruits, known as the “traitorous eight”, left his firm and founded Fairchild Semiconductor. Fairchild became so successful that it was the genesis of the area around Palo Alto becoming known as Silicon Valley. There are some amazing people in that traitorous eight, including Robert Noyce and Gordon Moore (Moore’s law), who later left Fairchild and started Intel. Another of the eight was Eugene Kleiner, who eventually founded the famous VC firm Kleiner Perkins (now KPCB). The traitorous eight is kind of the forerunner of the PayPal Mafia, with so many of its members founding successful companies later on.

The final few chapters are more modern, and therefore the stories are better known. Isaacson details the birth of the internet, through ARPANET and the invention of packets and various internet communication protocols. Apple and Microsoft are featured prominently in the development of the personal computer and software, respectively. Finally, he ends with the new internet age and the key role that browsers and search engines play in our world today.

I definitely found the older stories more interesting, partly because I knew less about them to start with. I think Isaacson covers a good mix of topics, but there are a few I’m disappointed he excluded. One is the role of information theory (more Claude Shannon!) and how important that was in the eventual development of mobile phones. Another is how businesses (rather than just consumers) used technology - I was hoping for an entire chapter on IBM and the mainframe. Finally, I’m obviously biased here, but I also would’ve liked to see some mention of the rising importance of cloud computing (this could’ve been a nice follow-up to an IBM mainframe chapter).

There are also some important lessons to learn, in terms of how huge innovations happen. One thing we notice right away is that most of the inventors were not just interested in science or technology - they also had interests in the arts. Ada Lovelace was Lord Byron’s daughter, and she spent her life both embracing that literary prestige and running away from it by studying math and working with Charles Babbage. Vannevar Bush, who was dean of engineering at MIT, founded Raytheon, and wrote the vision doc for the internet, loved the humanities growing up. He could quote Kipling, played the flute, loved symphonies, and read philosophy for pleasure. It’s not surprising that having well-rounded interests can lead to success, but it’s great to see it evident in some of the most important inventors.

A second major lesson is that collaboration is important. Nearly all the inventors described had a partner to work with. Lovelace worked with Babbage. Jobs had Wozniak, and Gates had Allen. Brattain and Bardeen, who got to the idea of the transistor before Shockley improved on it, had a great working relationship in which one was the physics theorist while the other would do the engineering and build a practical prototype. By contrast, a figure like John Atanasoff, who theorized and even built one of the first computers, will not be remembered. He worked alone at Iowa State and didn’t have a team of people to help him with the practical realities of building a large mechanical machine. Consequently, while he wrote a great research paper, he could never get his model working.

The final major lesson is the importance of research, and also the funding of research, in the development of a country and an economy. Vannevar Bush wrote a proposal to President Harry Truman titled “Science, the Endless Frontier”. He completely nails the reason why research is so important. He wrote “A nation which depends upon others for its new basic scientific knowledge will be slow in its industrial progress and weak in its competitive position in world trade” and “Advances in science when put to practical use mean more jobs, higher wages, shorter hours, more abundant crops, more leisure for recreation, for study, for learning how to live without the deadening drudgery which has been the burden of the common man for past ages.” That is a very elegant way of saying that science and technology are important!

Bush’s proposal eventually led to the National Science Foundation, which gives research grants to academics and institutions. Crucially, a lot of important technology was developed with government funding, like ARPANET and the idea of sending packets, the direct ancestors of the modern internet. I’m not very interested in politics, but I do think that for America to maintain its position of leadership in the world, it needs to keep investing in research and development. As R&D increasingly shifts to the private sector (companies like Google are so important for software research), we need more open immigration policies to retain all the talented people who want to work in the United States.

The Innovators is an interesting and important enough book that I will try to reread it periodically, maybe once every five years. Maybe the next time I read it, I will come away with even more lessons learned.
