Password Protecting an S3 Website

November 25, 2013

I previously wrote about how this site is hosted entirely on AWS, with all the content in Amazon S3. I recently created two sub-sites, wedding.xingdig.com and engagement.xingdig.com, that are also hosted on S3. These sites host hi-res pictures from my wedding and engagement event, and I like having them be easily and globally accessible. This post talks about my process for setting these sites up, and how I’m able to get a level of password protection on the site simply by using some neat S3 features: web page redirects and bucket policies.

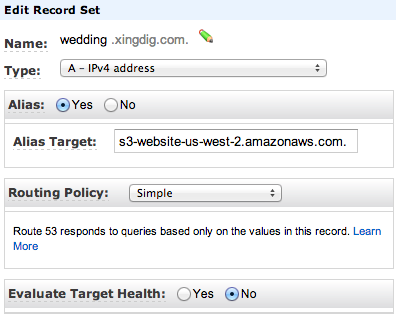

The first part is to create buckets for “wedding.xingdig.com” and “engagement.xingdig.com”. I already described that in my earlier post. The same configurations with Route 53 apply. I simply created two new record sets under my “xingdig.com” hosted zone. These record sets are aliases that tie back to my S3 region (I’m in us-west-2 in the example below).

For the site itself, I wrote a simple Perl script to generate the HTML. Each page consists of several hundred pictures, so I’m only showing thumbnails. Even with just thumbnails, each page load still downloads around 3-4 MB of content.
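The generator script itself isn't shown here, so the following is only a rough sketch of the idea in JavaScript rather than Perl. The `thumbs/` and `full/` directory names, the file names, and the function names are all assumptions made for illustration.

```javascript
// Hypothetical sketch: build a thumbnail gallery page from a list of image names.
// "thumbs/" and "full/" are assumed directory names, not the site's real layout.

// Escape characters that are unsafe inside HTML text and attribute values.
function escapeHtml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Return one thumbnail that links to the full-size picture.
function thumbnailLink(fileName) {
  const safe = escapeHtml(encodeURI(fileName));
  return `<a href="full/${safe}"><img src="thumbs/${safe}" alt=""></a>`;
}

// Assemble the whole page as a single string.
function buildGalleryPage(title, fileNames) {
  const links = fileNames.map(thumbnailLink).join("\n");
  return [
    "<!DOCTYPE html>",
    "<html>",
    `<head><meta charset="utf-8"><title>${escapeHtml(title)}</title></head>`,
    "<body>",
    links,
    "</body>",
    "</html>",
  ].join("\n");
}

const page = buildGalleryPage("Wedding", ["IMG_0001.jpg", "IMG_0002.jpg"]);
console.log(page);
```

The output can be written to a file and uploaded to the bucket like any other static page.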

I used the built-in Automator tool on my MacBook to generate the thumbnails. They are pretty low-fi, as their largest side is only 128 pixels. However, it was very simple, and I didn’t have to get an image manipulation tool such as ImageMagick. There’s a great guide on how to use Automator to generate thumbnails at this blog.
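For reference, the arithmetic behind "largest side is 128 pixels" is simple. This is a sketch of the scaling calculation only, with a made-up function name; it is not a description of what Automator does internally.

```javascript
// Scale (width, height) so that the longer side equals maxSide,
// preserving the aspect ratio. Note that this also scales small images up.
function thumbnailSize(width, height, maxSide = 128) {
  const scale = maxSide / Math.max(width, height);
  return {
    width: Math.max(1, Math.round(width * scale)),
    height: Math.max(1, Math.round(height * scale)),
  };
}

console.log(thumbnailSize(4000, 3000)); // landscape: 128 wide, 96 high
console.log(thumbnailSize(3000, 4000)); // portrait: 96 wide, 128 high
```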

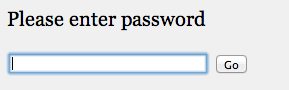

The part I’m most proud of is the password protection. When you visit either the wedding or the engagement pictures site, you get a prompt for a password.

The input box uses a simple piece of JavaScript that takes whatever was entered and tries to go to that target. For instance, if you type “password” in the box, it’ll try to go to wedding.xingdig.com/password. The code for the input box is below.

<form name="password_form" action="javascript:location.href =

window.document.password_form.page.value" style="margin:0;">

<div style="display:inline;">

<input type="text" name="page" autocorrect="off" autocapitalize="off" autofocus size="35">

<input type="submit" value="Go">

<noscript>

<div style="display:inline;color:#ff0000;

background-color:#ffff66; font:normal 11px tahoma,sans-serif;">

<br>Javascript is required to access this area. Yours seems to be disabled.</div>

</noscript>

</div>

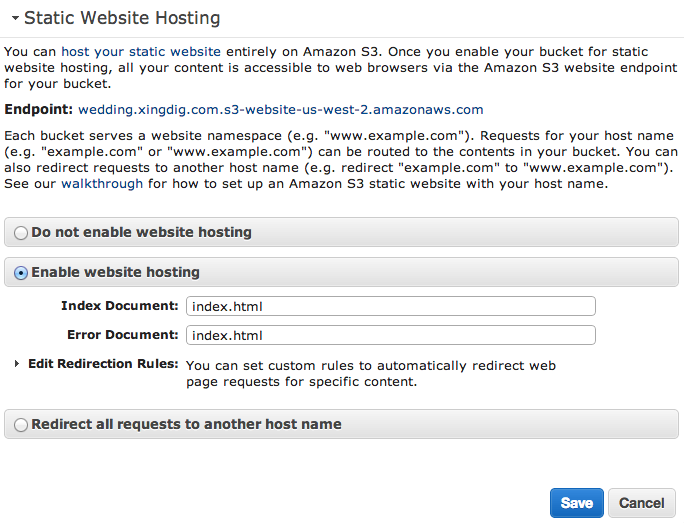

</form>The way the password protection works is that the correct password goes to a zero-byte object that is hosted in my S3 bucket. That object then redirects to the correct page that loads the pictures. If the password is incorrect, it will go to a page that doesn’t exist, and S3’s static website hosting rules kick in. To allow password retries, I configured the error document of my static website to go back to the opening index page.
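The flow can be modelled in a few lines. This is a toy sketch, not how S3 is implemented: the key `secret` stands in for the real password, the function and variable names are made up, and the model ignores the bucket policy and the fact that a real redirect is a round trip through the browser.

```javascript
// Toy model of the bucket: each key is either a normal page or a
// zero-byte object that carries a redirect target.
const INDEX_DOCUMENT = "index.html";
const ERROR_DOCUMENT = "index.html"; // wrong guesses land back on the prompt

const bucket = new Map([
  ["index.html", { body: "password prompt" }],
  ["secret", { redirect: "/mypage.html" }], // hypothetical password object
  ["mypage.html", { body: "pictures" }],
]);

// Resolve a request path to the document that ends up being shown.
function resolve(path, maxHops = 5) {
  let key = path.replace(/^\//, "") || INDEX_DOCUMENT;
  for (let hop = 0; hop < maxHops; hop++) {
    const object = bucket.get(key);
    if (object === undefined) {
      // No such key: the website endpoint serves the error document.
      return { found: false, served: ERROR_DOCUMENT };
    }
    if (object.redirect === undefined) {
      return { found: true, served: key };
    }
    // Redirect object: follow it to the target key.
    key = object.redirect.replace(/^\//, "");
  }
  throw new Error("too many redirects");
}

console.log(resolve("/secret")); // served: "mypage.html"
console.log(resolve("/wrong-guess")); // served: "index.html"
```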

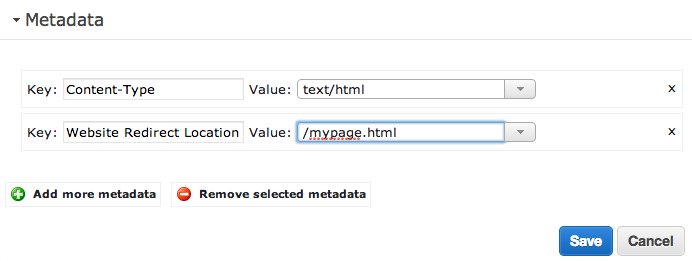

Setting up a redirect on the “correct” password is easy as well. On the S3 console, I simply added the Website Redirect Location metadata to the object. So, if the page you want to load on a successful password attempt is mypage.html, you would set up the redirect as below. Here is the link for the documentation on web page redirects.
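Outside the console, the same metadata is set with the `x-amz-website-redirect-location` header on the PUT Object request. The sketch below only builds that header set. The function name is made up, and the request signing and actual upload are left out.

```javascript
// Build the headers for a PUT Object request that creates a zero-byte
// redirect object. Authentication headers are deliberately omitted.
function redirectObjectHeaders(target) {
  // S3 requires the value to start with "/", "http://", or "https://".
  if (!/^(\/|https?:\/\/)/.test(target)) {
    throw new Error(`invalid redirect target: ${target}`);
  }
  return {
    "Content-Length": "0", // the object itself has no body
    "x-amz-website-redirect-location": target,
  };
}

console.log(redirectObjectHeaders("/mypage.html"));
```

A bare `mypage.html` without the leading slash is rejected here, which mirrors the prefix rule S3 applies to this header.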

The final piece is setting a bucket policy. This makes it so that even if someone knew the direct URL of your successful page (e.g., if it’s example.com/mypage.html), they still would have to enter a password.

Basically, I set a bucket policy that only allows access to my real content (and for the “password” object) if the HTTP referer is also my site. This means that once you successfully enter a password, you can freely visit all the content and links, because those requests come with an HTTP referer of wedding.xingdig.com. Here is the link for the documentation on S3’s bucket policies.

Here is an example bucket policy for engagement.xingdig.com, which consists of one index.html page (the page that asks for a password), a password object (I use <password> for this), and a page for pictures that is titled “mypage.html” in the example below. I also made all my images public.

    {
      "Version": "2008-10-17",
      "Id": "Policy1384199197545",
      "Statement": [
        {
          "Sid": "Allow index.html for all requesters",
          "Effect": "Allow",
          "Principal": {
            "AWS": "*"
          },
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::engagement.xingdig.com/index.html"
        },
        {
          "Sid": "Allow <password> for all requesters",
          "Effect": "Allow",
          "Principal": {
            "AWS": "*"
          },
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::engagement.xingdig.com/<password>"
        },
        {
          "Sid": "Allow mypage.html if redirected to",
          "Effect": "Allow",
          "Principal": {
            "AWS": "*"
          },
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::engagement.xingdig.com/mypage.html",
          "Condition": {
            "StringLike": {
              "aws:Referer": [
                "engagement.xingdig.com/*",
                "*"
              ]
            },
            "Null": {
              "aws:Referer": false
            }
          }
        }
      ]
    }

The only downside here is that I’m at the mercy of how browsers pass along the HTTP referer. Each browser caches differently, and most of them will keep passing that HTTP referer for a little while after visiting the page. You can usually see this by testing the difference between a regular refresh (something like F5 on Chrome) and a hard refresh (hold down Ctrl and press F5).
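To see what the referer condition on mypage.html accepts, here is a simplified sketch of how it evaluates. It is a model under stated assumptions, not AWS's implementation: `StringLike` is treated as case-sensitive matching where `*` is any run of characters and `?` is one character, the listed values are OR'ed, and the two operators are AND'ed. The function names are made up.

```javascript
// Convert a StringLike pattern to an anchored regular expression.
function likeToRegExp(pattern) {
  const escaped = pattern.replace(/[.+^${}()|[\]\\]/g, "\\$&");
  return new RegExp("^" + escaped.replace(/\*/g, ".*").replace(/\?/g, ".") + "$");
}

// The patterns exactly as they appear in the policy above.
const patterns = ["engagement.xingdig.com/*", "*"];

// referer is the Referer header value, or undefined when it is absent.
function conditionAllows(referer) {
  const present = referer !== undefined; // "Null": { "aws:Referer": false }
  const matches =
    present && patterns.some((p) => likeToRegExp(p).test(referer)); // OR
  return present && matches; // both operators must hold
}

console.log(conditionAllows(undefined)); // false: direct visit, no referer
console.log(conditionAllows("http://engagement.xingdig.com/index.html")); // true
console.log(conditionAllows("http://example.org/")); // true, via the "*" entry
```

In this model the first pattern never matches a full referer URL, because the header value starts with the scheme (`http://`). It is the `"*"` entry that lets requests through, so the condition effectively checks that some referer is present rather than that it comes from this site. A pattern such as `http://engagement.xingdig.com/*` on its own would be the stricter form.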

For the purposes of password protecting my personal pictures, this does the job for me. If anyone does happen to stumble upon those pages, they’ll have to know the password to initiate anything on the site.

And, as a reminder, all of this is done entirely with Amazon S3 and Amazon Route 53! There is absolutely no dynamic, server-side content, so my S3 bill still only comes to a few pennies a month.
